Docker and Kubernetes 101

All you need to stay confident while using Docker and Kubernetes

Before looking at what Docker is and what it is used for, let's get to know a few terms.

OS Kernel:

The kernel is responsible for interacting with the underlying hardware. Operating systems like Ubuntu, CentOS, and Fedora each consist of an OS kernel (Linux in this case) and a set of applications that run on top of it.

Virtual Machine(VM) Vs Docker:

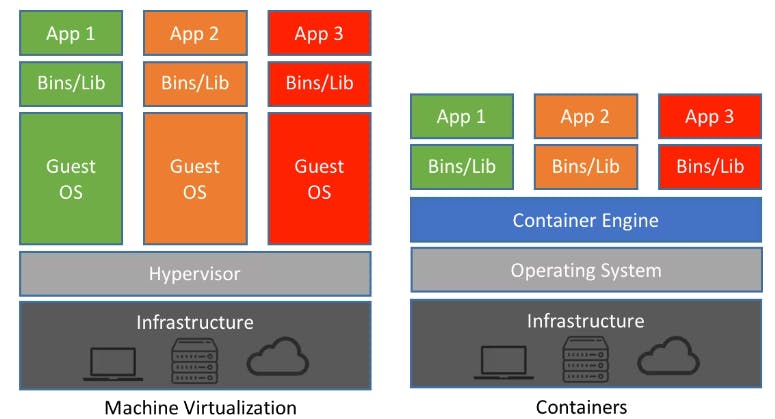

In the case of a VM, the stack is: underlying hardware -> hypervisor -> separate VMs installed on top of the hypervisor. Each VM has its own OS, applications, libraries and dependencies.

- A VM virtualizes the hardware, so every VM runs a full OS of its own, kernel included

In the case of Docker, the stack is: underlying hardware -> OS -> Docker installation/container engine -> containers. Here the containers hold only applications, libraries and dependencies, and they share the host OS.

- Docker virtualizes at the OS level: containers share the host kernel and package only the application layer

What is Docker:

It is a tool for running applications in an isolated environment, similar to a virtual machine but much more lightweight.

If our app works in one environment, it will work in another too, because the app environment (libraries and needed binaries) is the same everywhere.

Image:

It is a package/template. This package is portable, meaning it can easily be transferred between teams (Dev to QA). It is used to create one or more containers. Containers are running instances of images, each with its own isolated environment and set of processes. An image is usually made of layers. Most images are built on a small Linux base image (for example alpine:3.10), and the application layer sits on top of that base layer.

Image: an application packaged together with the needed configuration, dependencies and a start script.

It is an artifact that can be moved around.

Containers

Simply put, a container is a running instance of an image. A container provides a runtime environment for an image: a running application needs storage for logs, configuration files, environment variables and so on, and the container provides a virtual file system for this purpose. Images (the packaged form of containers) are stored in a container repository. This can be a repository private to a company, or a public one such as Docker Hub.

Containers are isolated environments. They can have their own processes/services, network interfaces and mounts, just like a VM, except that they all share the same OS kernel.

The application that runs inside a container writes its logs and other files to this virtual file system.

An application inside a container listens on a container port, and that port is how we talk to the application. Other containers reach the application through the same port.

Before containers:

Developers used to install the software their application required directly on the underlying OS. To pass the application to other members or teams, you had to hand over the project together with an instruction file listing all needed dependencies and configuration. The steps also differed from one operating system to another, so setting everything up from scratch was tedious.

After Containers:

Developers no longer need to install the required software directly on the OS, because each container brings its own isolated environment. A container image packages an application with all needed dependencies and configuration. Once packaged, it is pushed to a container repository. Other developers can then install and run the whole application with one docker command. And guess what: the same single command works on any OS where Docker is installed.

Container port vs Host port

As we saw, a container is just a running instance of an image. It behaves a bit like a lightweight VM running on a host.

Multiple containers can run on our host machine, and the host machine has a limited number of ports available. Docker lets us run several versions of the same image side by side. For example, postgres:10 and the latest postgres both use container port 5432. Inside the container environment this is allowed, because each container is isolated from the others. But to access both from the host machine, you have to bind each container to a unique host port.

When running the image you can specify the binding as shown below. Here -p binds a host port to a container port (host:container), --name sets the container name, -e sets an environment variable, and -d runs the container in detached mode.

docker run -p5432:5432 --name latestPostgreswithPort -e POSTGRES_PASSWORD=pass -d postgres
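As a rough sketch of the situation described above, the script below starts the latest postgres and postgres:10 side by side. Both keep container port 5432, but each is bound to a different host port. The container names and host port 5433 are assumptions picked for illustration, and the docker commands are skipped when no docker CLI is found on the PATH.

```shell
#!/bin/sh
# Both containers listen on the same container port; only the host ports differ.
CONTAINER_PORT=5432
HOST_PORT_LATEST=5432   # host port for the latest postgres
HOST_PORT_V10=5433      # hypothetical free host port for postgres:10

if command -v docker >/dev/null 2>&1; then
  docker run -d --name pg-latest -e POSTGRES_PASSWORD=pass \
    -p "${HOST_PORT_LATEST}:${CONTAINER_PORT}" postgres
  docker run -d --name pg-10 -e POSTGRES_PASSWORD=pass \
    -p "${HOST_PORT_V10}:${CONTAINER_PORT}" postgres:10
  # The PORTS column lists the host -> container bindings.
  docker ps
else
  echo "docker CLI not found; skipping the docker commands"
fi
```

From the host, the two databases would then be reachable on localhost:5432 and localhost:5433 respectively.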

Docker Network

Docker creates an isolated network in which containers run. When we deploy two containers in the same user-defined network, they can communicate using the container name as the hostname, so no IP address is needed.

Commands

docker network ls shows a list of networks. Similarly, docker network create nw_name creates a new isolated network. To run a container on this network, we specify the network ID/name when running the image. Note that all options must come before the image name:

docker run -d -p5432:5432 --name postgres --net nw_name -e POSTGRES_PASSWORD=pass postgres
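To make name-based communication concrete, here is a small sketch under a few assumptions: the network name demo-net and the container name db are made up for illustration, and pg_isready (a client tool that ships in the postgres image) is used only as a convenient way to check that one container can reach the other by name.

```shell
#!/bin/sh
NETWORK=demo-net   # hypothetical network name
DB_NAME=db         # container name, which doubles as the hostname on the network

if command -v docker >/dev/null 2>&1; then
  docker network create "$NETWORK"
  docker run -d --name "$DB_NAME" --network "$NETWORK" \
    -e POSTGRES_PASSWORD=pass postgres
  # A second, throwaway container on the same network reaches the first by name.
  # The server may need a few seconds to start before it reports as ready.
  docker run --rm --network "$NETWORK" postgres \
    pg_isready -h "$DB_NAME" -p 5432
else
  echo "docker CLI not found; skipping the docker commands"
fi
```

No host port is published here, because the second container talks to the first directly over the shared network.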

Docker compose:

To run an image we may need to pass many command line arguments, such as a set of flags and environment variables. The command gets long, and if we have several such images to run, spelling everything out on the command line becomes tedious. Docker Compose eases this process. It is a tool for running multi-container applications on Docker. With Compose, you use a YAML file to configure your application's services.

If you use Docker Compose to deploy multiple containers, they are put in the same network by default.

A sample docker-compose YAML file looks like the one below. We can run it using

docker compose -f app.yaml up

    version: "3.7"

    services:
      app:
        image: node:12-alpine
        command: sh -c "yarn install && yarn run dev"
        ports:
          - 3000:3000
        working_dir: /app
        volumes:
          - ./:/app
        environment:
          MYSQL_HOST: mysql
          MYSQL_USER: root
          MYSQL_PASSWORD: secret
          MYSQL_DB: todos

      mysql:
        image: mysql:5.7
        volumes:
          - todo-mysql-data:/var/lib/mysql
        environment:
          MYSQL_ROOT_PASSWORD: secret
          MYSQL_DATABASE: todos

    volumes:
      todo-mysql-data:

Dockerfile:

It's a blueprint for creating a Docker image. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image.

Each line has the format

INSTRUCTION argument

Every Docker image is based on another image/OS, so every Dockerfile begins with a FROM instruction.

A RUN instruction then runs a Linux command on top of the base image named in FROM. We can have multiple RUN instructions. RUN commands are executed at build time, inside the image being built, not on the host.

We can copy our local files/source code into the image using the COPY instruction.

Finally, the ENTRYPOINT instruction sets the executable that will always run when the container starts.

To build a Docker image from a Dockerfile we pass two arguments to the docker build command: 1. the image name (-t appName:version), 2. the build context, i.e. the directory that contains the Dockerfile.

docker build -t my-first-app:1.0 /home/apps
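Putting the four instructions together, the sketch below writes a tiny Dockerfile and builds it. Everything in it is an assumption for illustration: the my-first-app directory, the one-line app.js stand-in application, and the choice of node:12-alpine as the base image. The docker commands are skipped when no docker CLI is available.

```shell
#!/bin/sh
# Build context: a directory holding the app and its Dockerfile.
mkdir -p my-first-app

# A tiny stand-in application (hypothetical).
printf 'console.log("hello from the container");\n' > my-first-app/app.js

cat > my-first-app/Dockerfile <<'EOF'
# Base image that this image is built on.
FROM node:12-alpine
# RUN executes at build time, inside the image being built.
RUN mkdir -p /app
# COPY brings a file from the build context into the image.
COPY app.js /app/app.js
# ENTRYPOINT is the executable started with every container.
ENTRYPOINT ["node", "/app/app.js"]
EOF

if command -v docker >/dev/null 2>&1; then
  # -t names the image; the last argument is the build context directory.
  docker build -t my-first-app:1.0 my-first-app
  docker run --rm my-first-app:1.0
else
  echo "docker CLI not found; skipping build and run"
fi
```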

Docker volumes:

Docker volumes are used for persisting data generated by and used by Docker containers. For databases and other stateful applications, we may need to use volumes.

Let's assume our host machine runs a container, and each container has its own virtual file system. When a container is removed and recreated, its virtual file system starts fresh, so all data written to it is lost. This is where Docker volumes help us.

To make data persistent, we mount a directory of the physical/host file system into the container. It acts as a directory shared between the host and the container: if data is modified in the virtual file system from inside the container, the change is reflected on the physical file system too. We specify the volume along with the run command:

docker run -v host-file-system-path:container-virtual-fs-path

We have 3 types of volumes:

- Host volumes
- Anonymous volumes
- Named volumes
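The three types differ only in what is written before the colon in the -v flag. The sketch below shows one of each, using the mysql:5.7 image and its data directory /var/lib/mysql; the host directory mysql-data, the volume name mysql-named-data and the container names are assumptions for illustration.

```shell
#!/bin/sh
DATA_DIR="$PWD/mysql-data"   # hypothetical host directory
mkdir -p "$DATA_DIR"

if command -v docker >/dev/null 2>&1; then
  # Host volume: host path, then container path.
  docker run -d --name mysql-host -e MYSQL_ROOT_PASSWORD=secret \
    -v "$DATA_DIR":/var/lib/mysql mysql:5.7
  # Anonymous volume: container path only; Docker decides where the data lives.
  docker run -d --name mysql-anon -e MYSQL_ROOT_PASSWORD=secret \
    -v /var/lib/mysql mysql:5.7
  # Named volume: volume name, then container path.
  docker run -d --name mysql-named -e MYSQL_ROOT_PASSWORD=secret \
    -v mysql-named-data:/var/lib/mysql mysql:5.7
  # Lists the anonymous and named volumes managed by Docker.
  docker volume ls
else
  echo "docker CLI not found; skipping the docker commands"
fi
```

Named volumes are usually the easiest to work with, since they can be referenced by name from several containers.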

Docker Commands:

docker run: runs a container from an image. For example, docker run nginx runs an instance of the nginx application on the Docker host.

Basic:

- docker ps: lists all running containers.
- docker ps -a: lists all running and stopped containers.
- docker stop: stops a running container. The container name/ID must be provided.
- docker rm: removes an exited container, so that it does not take up extra disk space.
- docker images: shows the list of images available locally.
- docker rmi: removes images from our local repo, e.g. docker rmi <imagename>
- docker pull: downloads the image into our local repo without running it.
- docker run: pulls the image if it is not available locally, then runs it.
- docker exec -it <containID> /bin/bash: opens an interactive bash shell inside a running container, so that commands can be executed in it.
- exit: exits from that shell in the running container.
- docker run -d: the -d flag makes the container run in the background (detached mode).
- docker run postgres:10: here 10 is a tag specifying the version of Postgres that we would like to run.
- docker attach detachedContainerID: attaches your terminal to a container that is running in the background.
- docker run -i someImage: the -i parameter runs the image in interactive mode (it keeps standard input open).
- docker inspect <Container ID>: shows more details about a container, such as its internal IP address, image, etc.
- docker run -v /your/home:/var/jenkins_home jenkins: persists data between stopping and rerunning Jenkins. To make all configuration data persist, we need to map a volume to the container while running it.

Docker tag:

docker tag SOURCE_IMAGE[:TAG] TARGET_IMAGE[:TAG]

This command just creates an alias (a reference) named TARGET_IMAGE that refers to SOURCE_IMAGE. That’s all it does. It’s like giving an existing image another name to refer to it by. Notice how the tag is optional here as well, as shown by the [:TAG].

Kubernetes:

It is an open source technology, originally developed by Google, that makes orchestrating containerized applications easier. The official definition of Kubernetes is a container orchestration tool. Kubernetes works on top of containers.

It is a production-grade container orchestration system that automates container deployment, scaling, and management.

It helps us manage containerized applications in different deployment environments.

What is the need for a container orchestration tool like Kubernetes?

- The trend shift from monoliths to microservices.
- Increased usage of containers.
- Demand for managing hundreds of containers properly in different environments.

What do these container orchestration tools offer?

- High availability of applications, i.e. no or minimal downtime.
- Scalability, or high performance.
- Disaster recovery: backup and restore.

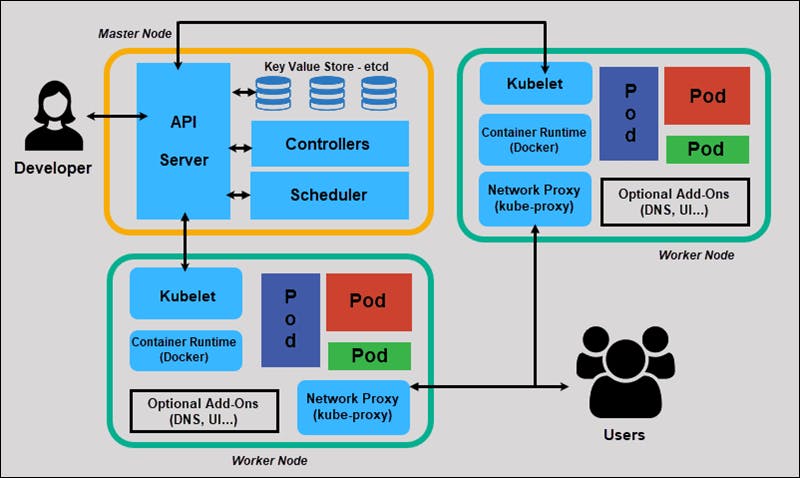

Kubernetes architecture

Pods

- Pods are the smallest unit of deployment in Kubernetes, just as the container is the smallest unit of deployment in Docker. As a simple mental model, a pod can be compared to a lightweight VM in the virtualization world. Each pod consists of one or more containers. Pods are ephemeral: they come and go, and since containers are meant to be stateless, pods do not maintain state. Usually, we run a single container inside a pod. There are some scenarios where we run multiple containers that depend on each other inside a single pod. Each time a pod spins up, it gets a new IP address from a virtual IP range assigned by the pod networking solution.
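For a feel of what a single-container pod looks like, here is a minimal sketch. The file name nginx-pod.yaml, the pod name and the label are assumptions for illustration, and the kubectl commands only run when kubectl is installed (they also need a reachable cluster).

```shell
#!/bin/sh
# A pod with one container, the most common case.
cat > nginx-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
EOF

if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f nginx-pod.yaml
  # -o wide adds the pod IP assigned by the pod network.
  kubectl get pod nginx-pod -o wide
else
  echo "kubectl not found; manifest written to nginx-pod.yaml"
fi
```

Deleting and recreating the pod would typically give it a different IP, which is why pods are normally reached through a stable abstraction rather than by IP.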

Helm is a Kubernetes deployment tool for automating the creation, packaging, configuration, and deployment of applications and services to Kubernetes clusters.

- it's a package manager for Kubernetes

kubectl

It is a command line utility through which we communicate with the Kubernetes cluster and instruct it to carry out a certain task.

With it, we can control the Kubernetes cluster manager. There are two ways to instruct the API server to create/update/delete resources in the Kubernetes cluster: the imperative way and the declarative way.

If you are just getting started, you can begin with the imperative way. In a production scenario, however, it is best practice to use the declarative way. Behind the scenes, an imperative kubectl command creates the same kind of API object (for example a Deployment) that you would otherwise describe declaratively in a manifest file.
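The two styles can be sketched side by side as follows. The deployment names web-imperative and web-declarative and the file name web-deployment.yaml are assumptions for illustration; both variants ask for two nginx replicas.

```shell
#!/bin/sh
# Declarative: the desired state lives in a file that can be version-controlled.
cat > web-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-declarative
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-declarative
  template:
    metadata:
      labels:
        app: web-declarative
    spec:
      containers:
        - name: nginx
          image: nginx
EOF

if command -v kubectl >/dev/null 2>&1; then
  # Imperative: say what to do, directly on the command line.
  kubectl create deployment web-imperative --image=nginx --replicas=2
  # Declarative: hand over the file and let Kubernetes reconcile towards it.
  kubectl apply -f web-deployment.yaml
  kubectl get deployments
else
  echo "kubectl not found; manifest written to web-deployment.yaml"
fi
```

With the declarative file, changing replicas to 3 and running kubectl apply again updates the existing Deployment instead of creating a new one.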

Namespace:

In Kubernetes, namespaces provide a mechanism for isolating groups of resources within a single cluster.

Namespaces are also a way to divide cluster resources between multiple users (via resource quotas).

Names of resources need to be unique within a namespace, but not across namespaces.
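The uniqueness rule can be tried out with a small sketch like the one below, which creates a namespace and then starts a pod with the same name in two namespaces. The namespace name dev, the pod name nginx and the file name dev-namespace.yaml are assumptions for illustration.

```shell
#!/bin/sh
cat > dev-namespace.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: dev
EOF

if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f dev-namespace.yaml
  # Same pod name in two namespaces: no clash, names are only unique per namespace.
  kubectl run nginx --image=nginx -n dev
  kubectl run nginx --image=nginx -n default
  kubectl get pods --all-namespaces
else
  echo "kubectl not found; manifest written to dev-namespace.yaml"
fi
```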

Chart

Helm uses a packaging format called charts.

A chart is a collection of files that describe a related set of Kubernetes resources. A single chart might be used to deploy something simple, like a memcached pod, or something complex, like a full web app stack with HTTP servers, databases, caches, and so on.

persistent volume(PV) vs persistent volume claims(PVC)

A persistent volume (PV) is the "physical" volume on the host machine that stores your persistent data. A persistent volume claim (PVC) is a request for the platform to provide a PV for you, either by creating one or by binding to an existing one, and you attach PVs to your pods via a PVC.

Pod -> PVC -> PV -> Host machine

A PVC is a declaration of a need for storage that can at some point be satisfied by being bound to some actual PV.

It is a bit like the asynchronous programming concept of a promise. A PVC promises that it will at some point "translate" into a storage volume that your application will be able to use, and that the volume will have the requested characteristics, such as storage class, size, and access mode (ROX, RWO, or RWX).
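The Pod -> PVC -> PV chain could look roughly like the sketch below: a claim asking for 1Gi with the RWO (ReadWriteOnce) access mode, and a pod that mounts whatever volume ends up bound to the claim. The names data-claim and claim-demo, the requested size and the mount path are assumptions for illustration. Whether the claim is actually satisfied depends on the cluster, for example on a default storage class being available.

```shell
#!/bin/sh
cat > pvc-demo.yaml <<'EOF'
# The claim: a request for storage with a size and an access mode.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
# The pod refers to the claim, not to a PV directly.
apiVersion: v1
kind: Pod
metadata:
  name: claim-demo
spec:
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: data-claim
EOF

if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f pvc-demo.yaml
  # STATUS shows Pending until a PV is bound, then Bound.
  kubectl get pvc data-claim
else
  echo "kubectl not found; manifest written to pvc-demo.yaml"
fi
```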

Miscellaneous

Docker is an implementation of containers.

library is the default namespace for official images on Docker Hub (for example, nginx is short for library/nginx).

The underlying host where Docker is installed is called the Docker host. Docker Engine is the software that runs on it.

If we do not specify a tag, Docker assumes we want to pull/run the image with the latest tag.

Whenever Docker builds an image, it caches each layer. When a rebuild is required, it reuses the cached layers up to the first changed instruction and rebuilds only from there on.

docker.io is Docker's default registry for storing images (docker.io is the DNS name for Docker Hub). Similarly, Google's registry is gcr.io, the AWS registry is ECR, and for Azure it is ACR (Azure Container Registry).

Pods are the smallest unit of deployment in Kubernetes, and the container is the smallest unit of deployment in Docker.